
Design Tooling - Design Machines


Information

Introduction


Background

The 1981 paper "Design Machines" by George Stiny and L. March outlined the development of an autonomous system for creating designs. Their framework divides the design process into four mechanisms: Receptor, Effector, Language of Designs, and Design Theory. The receptor creates representations of external conditions, and the effector drives external processes or produces artifacts from designs. According to Stiny and March, receptors can be sensors or traditional input devices such as a keyboard or a mouse, while effectors might be printers, CNC machines, or even robots. Together, the receptor and effector determine the design context. The Language of Designs gives an account of the formal parameters of designs. Finally, the Design Theory describes the correspondence between the Language and the Context.
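To make the division of labor concrete, here is a minimal Python sketch of the four mechanisms wired together. The class name `DesignMachine`, the toy room-width "language", and the scoring function standing in for a design theory are illustrative assumptions, not taken from Stiny and March's paper.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Context = Dict[str, float]  # representation of external conditions (receptor output)
Design = List[float]        # a design described by its formal parameters


@dataclass
class DesignMachine:
    receptor: Callable[[], Context]             # senses the context (e.g. keyboard, mouse, or sensor)
    language: Callable[[], List[Design]]        # enumerates the designs the language allows
    theory: Callable[[Design, Context], float]  # rates how well a design corresponds to the context
    effector: Callable[[Design], str]           # realizes a design (e.g. printer, CNC machine, robot)

    def run(self) -> str:
        context = self.receptor()
        candidates = self.language()
        # The design theory selects the candidate that best fits the sensed context.
        best = max(candidates, key=lambda d: self.theory(d, context))
        return self.effector(best)


# Hypothetical toy example: choose room widths that exactly fill a site of the sensed width.
machine = DesignMachine(
    receptor=lambda: {"site_width": 12.0},
    language=lambda: [[4, 4], [6, 6], [8, 8], [5, 5, 5]],
    theory=lambda d, ctx: -abs(sum(d) - ctx["site_width"]),
    effector=lambda d: "plan: " + " + ".join(str(w) for w in d) + " m",
)
print(machine.run())  # plan: 6 + 6 m
```

Keeping each mechanism as a plain function makes it easy to swap one receptor or effector for another while leaving the language and theory untouched.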


Weaver Bird


Implementation


Perception

Introduction


Images

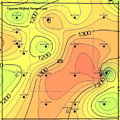

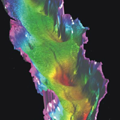

Image types:

- Camera images: produced using a lens and a light-sensitive receptor.
- Transmission images: produced when light or other radiation is passed through an object (e.g., X-ray).
- Sonic images: produced by reflecting sound waves off an object (e.g., medical ultrasound).
- Radar images: the traditional radar screen.
- Pressure maps: information from a grid of pressure sensors.
- Range images: a matrix of distances to different objects in a space (e.g., sonar).

Pixel-Based Approach: Scanned 2D images and digital photographs can be used to import information about context into a computational platform, although images can also be produced by other means. These imported images become digital objects when they are translated into a grid of colored pixels. Pixel-based image recognition and manipulation algorithms all rely on procedures that examine and manipulate images at the pixel level.
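As a small illustration of working at the pixel level, the sketch below stores an image as a grid of RGB tuples and converts it to gray levels one pixel at a time. The function name `to_gray` is hypothetical, and the ITU-R BT.601 luma weights are one common choice for the conversion, not the only one.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]  # red, green, blue, each 0-255


def to_gray(image: List[List[RGB]]) -> List[List[int]]:
    """Convert a grid of RGB pixels to gray levels 0-255 using BT.601 luma weights."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


# A 2x2 image: white, red / black, blue.
image = [
    [(255, 255, 255), (255, 0, 0)],
    [(0, 0, 0), (0, 0, 255)],
]
print(to_gray(image))  # [[255, 76], [0, 29]]
```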

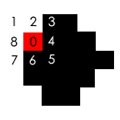

Getting Started: A good place to start is with operations on bi-level images. Images containing only black pixels and white pixels are the easiest to interpret and manipulate. Thresholding converts a gray-level or color image to a bi-level image by setting each pixel to black or white depending on whether its value falls below or above a chosen threshold. One way to define objects in an image is through connectivity. The "seed" method can identify a bounded object from a single seed pixel: the algorithm checks the neighbors of the seed, then the neighbors' neighbors, and so on, until it has reached the boundary of the object on every side. Once objects are defined in an image, they can be interrogated through geometric measures such as area and perimeter. Through a method called erosion, "extra" pixels can be removed from an image in order to produce cleaner images in which objects can be picked out more readily.

Reference: J.R. Parker, Practical Computer Vision Using C. John Wiley & Sons, New York, 1994.
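The operations described above can be sketched on a grid of gray levels stored as nested Python lists. This is an illustrative sketch, not Parker's C code: the function names are hypothetical, object pixels are assumed to be the dark ones (gray level below the threshold), connectivity is 4-neighbor, perimeter is counted as the number of object pixels touching the background, and erosion uses a cross-shaped (4-neighbor) structuring element.

```python
from collections import deque
from typing import List, Set, Tuple

Image = List[List[int]]
Pixel = Tuple[int, int]  # (row, column)
NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity (assumed)


def threshold(gray: Image, t: int) -> Image:
    """Bi-level image: 1 (object) where the gray level is below t, else 0 (background)."""
    return [[1 if v < t else 0 for v in row] for row in gray]


def seed_fill(img: Image, seed: Pixel) -> Set[Pixel]:
    """Grow outward from the seed through connected object pixels until the boundary is reached."""
    rows, cols = len(img), len(img[0])
    if img[seed[0]][seed[1]] != 1:
        return set()
    found = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and img[nr][nc] == 1 and (nr, nc) not in found:
                found.add((nr, nc))
                queue.append((nr, nc))
    return found


def area(obj: Set[Pixel]) -> int:
    return len(obj)


def perimeter(obj: Set[Pixel]) -> int:
    """Object pixels with at least one 4-neighbor outside the object."""
    return sum(1 for r, c in obj if any((r + dr, c + dc) not in obj for dr, dc in NEIGHBORS))


def erode(img: Image) -> Image:
    """Keep an object pixel only if all four of its neighbors are object pixels."""
    rows, cols = len(img), len(img[0])

    def on(r: int, c: int) -> bool:
        return 0 <= r < rows and 0 <= c < cols and img[r][c] == 1

    return [
        [1 if on(r, c) and all(on(r + dr, c + dc) for dr, dc in NEIGHBORS) else 0
         for c in range(cols)]
        for r in range(rows)
    ]


# A dark 3x3 square plus one stray dark pixel on a light background.
gray = [
    [200, 200, 200, 200, 200, 200],
    [200,  30,  30,  30, 200, 200],
    [200,  30,  30,  30, 200,  40],
    [200,  30,  30,  30, 200, 200],
    [200, 200, 200, 200, 200, 200],
]
bi = threshold(gray, 128)
square = seed_fill(bi, (2, 2))
print(area(square), perimeter(square))  # 9 8
print(sum(map(sum, erode(bi))))         # 1: only the square's center survives
```

The stray pixel is never reached from the seed, so it forms a separate object, and a single erosion pass removes it entirely.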

Shape-Based Approach: Shape grammars, developed by G. Stiny and J. Gips, stand as a critique of the vast majority of computational vision systems. Shape grammars can be aligned with Gestalt theories of perception, in which compositions are more complex than the sum of their constituent parts. According to shape grammarians, images are not composed of pixels; instead, the structure and complexity of an image is a function of the operations performed on it. Apart from its reliance on shape, the shape grammar formalism does not adhere to any "fixed" means of decomposing images. Reference: http://www.shapegrammar.org
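A shape rule A → B can be sketched in a few lines if shapes are reduced to sets of line segments and rules may only be translated. This is a deliberate simplification: actual shape grammars match rules under Euclidean transformations and treat lines as maximal lines, so that emergent subshapes can be found, which this sketch cannot do. All names here are hypothetical. Even so, it shows that two squares sharing an edge can be "seen" in more than one way, because the shared edge belongs to either square.

```python
from typing import FrozenSet, List, Tuple

Point = Tuple[int, int]
Segment = Tuple[Point, Point]
Shape = FrozenSet[Segment]


def seg(a: Point, b: Point) -> Segment:
    """Store each segment with sorted endpoints so (a, b) and (b, a) compare equal."""
    return (a, b) if a <= b else (b, a)


def shape(*segments: Segment) -> Shape:
    return frozenset(seg(a, b) for a, b in segments)


def translate(s: Shape, dx: int, dy: int) -> Shape:
    return frozenset(seg((x1 + dx, y1 + dy), (x2 + dx, y2 + dy)) for (x1, y1), (x2, y2) in s)


def matches(s: Shape, lhs: Shape) -> List[Tuple[int, int]]:
    """Every translation under which the rule's left-hand side is part of the shape."""
    anchor = min(lhs)[0]
    found = set()
    for segment in s:
        for p in segment:
            dx, dy = p[0] - anchor[0], p[1] - anchor[1]
            if translate(lhs, dx, dy) <= s:
                found.add((dx, dy))
    return sorted(found)


def apply_rule(s: Shape, lhs: Shape, rhs: Shape, dx: int, dy: int) -> Shape:
    """Shape rule lhs -> rhs: subtract the translated lhs, then add the translated rhs."""
    return (s - translate(lhs, dx, dy)) | translate(rhs, dx, dy)


square = shape(((0, 0), (1, 0)), ((1, 0), (1, 1)), ((1, 1), (0, 1)), ((0, 1), (0, 0)))
square_with_diagonal = square | shape(((0, 0), (1, 1)))

# Two unit squares sharing their middle edge (7 segments).
pair = square | translate(square, 1, 0)
print(matches(pair, square))  # [(0, 0), (1, 0)]
result = apply_rule(pair, square, square_with_diagonal, 1, 0)
print(len(result))  # 8
```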

(*) Topics for Discussion: Many theorists, including Stiny, have argued that much of creative behavior is embedded in perception. "New" A.I. also claims that perception is inseparable from reasoning. Can designers benefit from discussing and implementing "ways of seeing" explicitly? How might new means of perception enabled by technology change the way that architects see their own role as designers of environments?

(**) Assignment: Design a mode of perception which embodies desirable biases. Use this perceptive mechanism to interpret various artifacts and environments.


Shape Grammars How many ways can you decompose this shape? |

Pixelization |

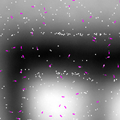

Camera Image |

Radar Image |

Transmission Image |

Range Image |


Video

Computer Vision using a WebCam, by Josh Nimoy et al.: "What is Computer vision? Realtime video input digitally computed such that intelligent assertions can be made by interactive systems about people and things. Popular techniques in new media arts and sciences include the ability to detect movement and presence in spaces, appearance of objects or people, how many of them are there, which way it's facing, and edge path vectors. Myron brings computer vision to a growing number of interactive media development platforms, allowing cameras connected to your computer to control just about anything. This software aims to make computer vision easy and inexpensive for the people! Currently, it has more 'tracking' functionality than other plugins with similar aim." Reference: http://webcamxtra.sourceforge.net/
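The sketch below is not Myron's API; it is a library-independent illustration of frame differencing, one common way to detect movement in a space. Frames are assumed to be gray-level grids stored as nested lists, and the function names and the change threshold of 30 are arbitrary assumptions.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # gray levels 0-255


def motion_mask(prev: Frame, curr: Frame, threshold: int = 30) -> Frame:
    """1 where the gray level changed by more than `threshold` between two frames."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prow, crow)]
        for prow, crow in zip(prev, curr)
    ]


def centroid(mask: Frame) -> Optional[Tuple[float, float]]:
    """Average (row, col) of changed pixels: a crude estimate of where the movement is."""
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        return None
    n = len(pts)
    return (sum(r for r, _ in pts) / n, sum(c for _, c in pts) / n)


# A bright 2x2 object appears in an otherwise unchanged 4x4 scene.
prev = [[10] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
for r in (1, 2):
    for c in (2, 3):
        curr[r][c] = 200
mask = motion_mask(prev, curr)
print(centroid(mask))  # (1.5, 2.5)
```

Tracking the centroid from frame to frame is the simplest form of the "tracking" functionality the quotation mentions; real systems add noise filtering and blob labeling.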


Web Cam Still

Pixelized

Bubblized

Vector Trace

Tracking


Microworlds

Introduction


Cellular Automata

Cellular automata were developed by John von Neumann (1903-1957). "Cellular automata are discrete dynamical systems whose behavior is completely specified in terms of a local relation. A cellular automaton can be thought of as a stylized universe. Space is represented by a uniform grid, with each cell containing a few bits of data; time advances in discrete steps and the laws of the "universe" are expressed in, say, a small lookup table, through which at each step each cell computes its new state from that of its close neighbors. Thus, the system's laws are local and uniform." (Brunel University Artificial Intelligence Site)
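The quoted description (a uniform grid, a few bits per cell, a small lookup table, local updates) can be made concrete with a one-dimensional "elementary" automaton. Note that this uses Stephen Wolfram's rule numbering, a much later and simpler system than von Neumann's own self-reproducing automaton; the function names are hypothetical and the row is assumed to wrap around at its ends.

```python
from typing import Dict, List, Tuple

Neighborhood = Tuple[int, int, int]  # (left, center, right), each 0 or 1


def make_rule(number: int) -> Dict[Neighborhood, int]:
    """Lookup table: bit k of `number` is the new state for the neighborhood spelling k in binary."""
    return {((k >> 2) & 1, (k >> 1) & 1, k & 1): (number >> k) & 1 for k in range(8)}


def step(cells: List[int], table: Dict[Neighborhood, int]) -> List[int]:
    """Every cell updates at once from itself and its two neighbors (edges wrap around)."""
    n = len(cells)
    return [table[(cells[i - 1], cells[i], cells[(i + 1) % n])] for i in range(n)]


rule90 = make_rule(90)  # new state = left XOR right
cells = [0, 0, 0, 1, 0, 0, 0]
history = [cells]
for _ in range(3):
    cells = step(cells, rule90)
    history.append(cells)
for row in history:
    print("".join("#" if c else "." for c in row))
# ...#...
# ..#.#..
# .#...#.
# #.#.#.#
```

The whole "law of the universe" here is the eight-entry table returned by `make_rule`; changing the rule number changes the universe without touching `step`.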

Suggested Assignments


Simon Greenwold 2003


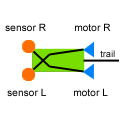

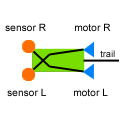

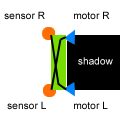

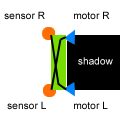

Braitenberg Vehicles

Introduction

(1) Left sensor to left motor / right sensor to right motor

Vehicles respond to the environment through the way their sensors are wired to their motors.
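The wiring in diagram (1) can be sketched as a two-wheeled vehicle whose motor speeds are set directly by two light sensors. The light falloff model, the sensor offset, and the class and function names are assumptions for illustration. With straight wiring (left sensor to left motor) the side nearer the light drives faster, so the vehicle turns away from the source; with crossed wiring it turns toward it, matching Braitenberg's vehicles 2a and 2b.

```python
import math
from dataclasses import dataclass
from typing import Tuple


def intensity(px: float, py: float, light: Tuple[float, float]) -> float:
    """Sensor reading that falls off with squared distance to the light (assumed model)."""
    d2 = (px - light[0]) ** 2 + (py - light[1]) ** 2
    return 1.0 / (1.0 + d2)


@dataclass
class Vehicle:
    x: float
    y: float
    heading: float           # radians; 0 means facing +x
    crossed: bool = False    # False: left sensor drives left motor, as in (1)
    half_width: float = 0.5  # sensor and wheel offset from the center line (assumed)

    def step(self, light: Tuple[float, float], dt: float = 0.1) -> None:
        # Sensors sit 90 degrees to the left and right of the heading.
        ox = -math.sin(self.heading) * self.half_width
        oy = math.cos(self.heading) * self.half_width
        left = intensity(self.x + ox, self.y + oy, light)
        right = intensity(self.x - ox, self.y - oy, light)
        # Straight or crossed wiring from sensors to motors.
        left_motor, right_motor = (right, left) if self.crossed else (left, right)
        # Differential drive: the mean speed moves the vehicle, the difference turns it.
        speed = (left_motor + right_motor) / 2
        turn = (right_motor - left_motor) / (2 * self.half_width)
        self.heading += turn * dt
        self.x += speed * math.cos(self.heading) * dt
        self.y += speed * math.sin(self.heading) * dt


# A light ahead and to the left of two vehicles at the origin facing +x.
straight = Vehicle(0.0, 0.0, 0.0)
crossed = Vehicle(0.0, 0.0, 0.0, crossed=True)
for v in (straight, crossed):
    v.step(light=(2.0, 2.0))
print(straight.heading < 0, crossed.heading > 0)  # True True
```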


Sticky Environments


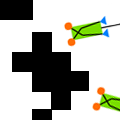

Yanni Loukissas 2004

Anatomy of a Braitenberg Vehicle


2D Vehicles, 3D Trails


Yanni Loukissas 2004

Preliminary Controls: Use "Shift", "x" and "z" with the mouse to pan, zoom and rotate.


Shadow Constructor


Yanni Loukissas 2004

Preliminary Controls: Hold down "d" to draw. Press "q" to start the vehicles. Use "Shift", "x" and "z" with the mouse to pan, zoom and rotate.


Advanced Shadow Constructor


Version 1

Version 2


Yanni Loukissas 2004

Preliminary Controls: Hold down "d" to draw. Press "q" to start the vehicles. Use "Shift", "x" and "z" with the mouse to pan, zoom and rotate.


Topics for Discussion

Suggested Assignments:

1) Design an environment to accommodate a programmed vehicle (e.g., painting with light).
2) Design a vehicle which will build a predetermined structure in response to a given environment.


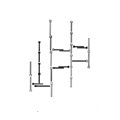

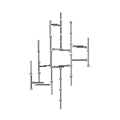

Design Games

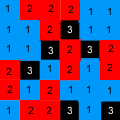

In this educational scenario, design machines will be situated as agents within the context of abstract design games. This strategy builds on research conducted in the 1980s at the MIT Department of Architecture, in which architectural design was explored through the metaphor of a game (Habraken 1987). The games developed at that time were entitled 'Concept Design Games.' They attempted to highlight some of the vital characteristics of design activity by focusing on the interaction between designers. This scenario proposes the development of systems that can exhibit the kind of visual reasoning required to play 'Concept Design Games.' The first game to be explored using architectural design machines is a variation of the silent game, developed by Habraken. In the silent game, designer/players build elaborate visual compositions through the use of patterns. This game highlights the implicit understandings that develop between designers as they make and project patterns through visual compositions.
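One way a design machine might take part in a pattern-based game like the silent game is sketched below, under heavily simplified, hypothetical rules: pieces occupy grid cells, and the machine projects the most frequent step between consecutive placements. This is not Habraken's rule set, and the function name and fallback move are assumptions; it is only meant as a starting point for reasoning about machine players.

```python
from collections import Counter
from typing import List, Tuple

Cell = Tuple[int, int]


def next_move(moves: List[Cell]) -> Cell:
    """Hypothetical machine player: continue the most frequent step between consecutive pieces."""
    if not moves:
        return (0, 0)
    steps = Counter(
        (b[0] - a[0], b[1] - a[1])
        for a, b in zip(moves, moves[1:])
        if a != b
    )
    if steps:
        (dx, dy), _ = steps.most_common(1)[0]
    else:
        dx, dy = 1, 0  # no pattern yet: place beside the last piece (arbitrary assumption)
    x, y = moves[-1]
    occupied = set(moves)
    candidate = (x + dx, y + dy)
    # Keep projecting the step until an empty cell is found.
    while candidate in occupied:
        candidate = (candidate[0] + dx, candidate[1] + dy)
    return candidate


print(next_move([(0, 0), (2, 0), (4, 0), (4, 1)]))  # (6, 1)
```

A human player watching this machine can quickly infer its bias toward repetition, which is exactly the kind of anticipation the discussion topics below invite.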

Topics for Discussion: As human designers/players attempt to anticipate the behavior of the machine, they become engaged in thinking about how design happens. The interaction between human and machine designer/players can be a controlled and informative opportunity for teachers and students alike to reflect on intuitive and mechanistic approaches to design.

Suggested Assignments:

1) Play the silent game with a design machine and discuss the results.
2) Develop a new concept design game and program a design machine to play it.


Yanni Loukissas 2004

User intervention with the use of Braitenberg vehicles. Diagrams from Concept Design Games by John Habraken and Mark Gross.
